[ToolCallItem(agent=Agent(name='Code Agent', instructions="\nYou solve tasks using an agentic coding loop in Python.\n\nFollow this loop carefully:\n\n- Think and write code. Send the code to the execute_code tool.\n- Get code output results back.\n- Think again, improve or add more code.\n- Get code output results back.\n...\n- Repeat until you've solved the problem completely.\n\nIf you encounter any errors:\n\n- FIX THEM and continue the loop.\n- If modules are not found, install them using: !pip install \n\nNever give up. Continue iterating until the task is fully solved.\nThe sandbox environment is safe, isolated, and supports running arbitrary Python code.\nState is maintained between code snippets:\nVariables and definitions persist across executions.\n# first code snippet\nx = 2\nprint(x)\n# second code snippet in separate request\ny = 6\nprint(x + y) # This works because state persists\n\nBegin your agentic coding loop!\n\n", handoff_description=None, handoffs=[], model='gpt-4o', model_settings=ModelSettings(temperature=None, top_p=None, frequency_penalty=None, presence_penalty=None, tool_choice=None, parallel_tool_calls=False, truncation=None, max_tokens=None), tools=[FunctionTool(name='execute_code', description='Execute the given Python code in a sandboxed environment and return the output.', params_json_schema={'properties': {'code': {'title': 'Code', 'type': 'string'}}, 'required': ['code'], 'title': 'execute_code_args', 'type': 'object', 'additionalProperties': False}, on_invoke_tool=._create_function_tool.._on_invoke_tool at 0x10d8ea700>, strict_json_schema=True), WebSearchTool(user_location=None, search_context_size='medium')], mcp_servers=[], input_guardrails=[], output_guardrails=[], output_type=None, hooks=None, tool_use_behavior='run_llm_again', reset_tool_choice=True), raw_item=ResponseFunctionWebSearch(id='ws_67edcd295da481919095a35d1770ce060e2583e709c47d03', status='completed', type='web_search_call'), type='tool_call_item'),

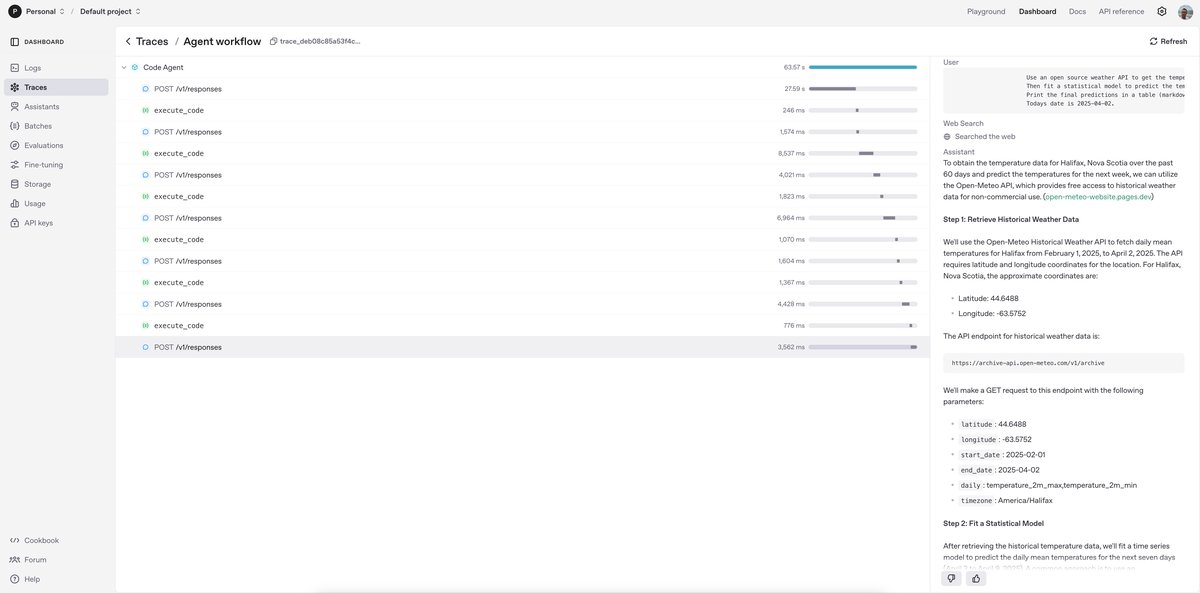

MessageOutputItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseOutputMessage(id='msg_67edcd2b183081919aae61ed7d739ca60e2583e709c47d03', content=[ResponseOutputText(annotations=[AnnotationURLCitation(end_index=358, 
start_index=239, title='🏛️ Historical Weather API | Open-Meteo.com', type='url_citation', url='https://open-meteo-website.pages.dev/en/docs/historical-weather-api?utm_source=openai')], text='To obtain the temperature data for Halifax, Nova Scotia over the past 60 days and predict the temperatures for the next week, we can utilize the Open-Meteo API, which provides free access to historical weather data for non-commercial use. ([open-meteo-website.pages.dev](https://open-meteo-website.pages.dev/en/docs/historical-weather-api?utm_source=openai))\n\n**Step 1: Retrieve Historical Weather Data**\n\nWe\'ll use the Open-Meteo Historical Weather API to fetch daily mean temperatures for Halifax from February 1, 2025, to April 2, 2025. The API requires latitude and longitude coordinates for the location. For Halifax, Nova Scotia, the approximate coordinates are:\n\n- Latitude: 44.6488\n- Longitude: -63.5752\n\nThe API endpoint for historical weather data is:\n\n```\nhttps://archive-api.open-meteo.com/v1/archive\n```\n\nWe\'ll make a GET request to this endpoint with the following parameters:\n\n- `latitude`: 44.6488\n- `longitude`: -63.5752\n- `start_date`: 2025-02-01\n- `end_date`: 2025-04-02\n- `daily`: temperature_2m_max,temperature_2m_min\n- `timezone`: America/Halifax\n\n**Step 2: Fit a Statistical Model**\n\nAfter retrieving the historical temperature data, we\'ll fit a time series model to predict the daily mean temperatures for the next seven days (April 3 to April 9, 2025). 
A common approach is to use an Autoregressive Integrated Moving Average (ARIMA) model, which is suitable for time series forecasting.\n\n**Step 3: Generate Predictions**\n\nUsing the fitted ARIMA model, we\'ll forecast the daily mean temperatures for the specified dates.\n\n**Step 4: Display Predictions in a Markdown Table**\n\nFinally, we\'ll present the predicted temperatures in a markdown-formatted table for clarity.\n\nLet\'s proceed with the implementation:\n\n```python\nimport requests\nimport pandas as pd\nfrom statsmodels.tsa.arima.model import ARIMA\nimport matplotlib.pyplot as plt\n\n# Step 1: Retrieve Historical Weather Data\nlatitude = 44.6488\nlongitude = -63.5752\nstart_date = "2025-02-01"\nend_date = "2025-04-02"\ntimezone = "America/Halifax"\n\nurl = f"https://archive-api.open-meteo.com/v1/archive?latitude={latitude}&longitude={longitude}&start_date={start_date}&end_date={end_date}&daily=temperature_2m_max,temperature_2m_min&timezone={timezone}"\n\nresponse = requests.get(url)\ndata = response.json()\n\n# Extract dates and temperatures\ndates = data[\'daily\'][\'time\']\ntemp_max = data[\'daily\'][\'temperature_2m_max\']\ntemp_min = data[\'daily\'][\'temperature_2m_min\']\n\n# Calculate mean temperatures\ntemp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\n\n# Create a DataFrame\ndf = pd.DataFrame({\'Date\': pd.to_datetime(dates), \'Mean_Temperature\': temp_mean})\n\n# Step 2: Fit a Statistical Model\n# Set the date as the index\ndf.set_index(\'Date\', inplace=True)\n\n# Fit an ARIMA model\nmodel = ARIMA(df[\'Mean_Temperature\'], order=(5, 1, 0)) # ARIMA(p, d, q)\nmodel_fit = model.fit()\n\n# Step 3: Generate Predictions\nforecast = model_fit.forecast(steps=7)\nforecast_dates = pd.date_range(start=df.index[-1] + pd.Timedelta(days=1), periods=7)\n\n# Step 4: Display Predictions in a Markdown Table\nforecast_df = pd.DataFrame({\'Date\': forecast_dates, \'Predicted_Mean_Temperature\': 
forecast})\nforecast_df.reset_index(drop=True, inplace=True)\n\n# Convert DataFrame to markdown table\nmarkdown_table = forecast_df.to_markdown(index=False)\n\nprint(markdown_table)\n```\n\n**Note**: Ensure that the required libraries (`requests`, `pandas`, `statsmodels`, and `matplotlib`) are installed in your Python environment. You can install them using pip if they are not already installed:\n\n```\npip install requests pandas statsmodels matplotlib\n```\n\nThis script will output a markdown-formatted table with the predicted mean temperatures for Halifax, Nova Scotia, from April 3 to April 9, 2025. ', type='output_text')], role='assistant', status='completed', type='message'), type='message_output_item'),
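Step 1 above builds the request by interpolating values into an f-string. Below is a minimal sketch that assembles the same query with the standard library's `urllib.parse.urlencode`. That function percent-encodes reserved characters such as the `/` in `America/Halifax` and the commas in the `daily` list. The sketch assumes the server decodes percent-encoded query values, as HTTP servers normally do. The endpoint and parameter names are the ones quoted in the message. `build_archive_url` is a hypothetical helper, and the sketch only builds the URL; it does not send a request.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Endpoint and parameters as quoted in the message above.
ARCHIVE_URL = "https://archive-api.open-meteo.com/v1/archive"


def build_archive_url(latitude, longitude, start_date, end_date, timezone):
    """Return the archive request URL (hypothetical helper; nothing is sent)."""
    params = {
        "latitude": latitude,
        "longitude": longitude,
        "start_date": start_date,
        "end_date": end_date,
        # Comma-separated list of daily variables to return.
        "daily": "temperature_2m_max,temperature_2m_min",
        "timezone": timezone,
    }
    # urlencode percent-encodes reserved characters such as "/" and ",".
    return f"{ARCHIVE_URL}?{urlencode(params)}"


url = build_archive_url(44.6488, -63.5752, "2025-02-01", "2025-04-02", "America/Halifax")
print(url)

# Round-trip check: decoding the query string recovers the original values.
query = parse_qs(urlsplit(url).query)
print(query["timezone"], query["daily"])
```

With `requests`, the same parameter dict can instead be passed directly as `requests.get(ARCHIVE_URL, params=params, timeout=30)`. Calling `response.raise_for_status()` before `response.json()` surfaces HTTP errors immediately, rather than letting them fail later on a missing `'daily'` key.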

ToolCallItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseFunctionToolCall(arguments='{"code":"import requests\\nimport pandas as pd\\nfrom statsmodels.tsa.arima.model import ARIMA\\n\\n# Step 1: Retrieve Historical 
Weather Data\\nlatitude = 44.6488\\nlongitude = -63.5752\\nstart_date = \\"2025-02-01\\"\\nend_date = \\"2025-04-02\\"\\ntimezone = \\"America/Halifax\\"\\n\\nurl = f\\"https://archive-api.open-meteo.com/v1/archive?latitude={latitude}&longitude={longitude}&start_date={start_date}&end_date={end_date}&daily=temperature_2m_max,temperature_2m_min&timezone={timezone}\\"\\n\\nresponse = requests.get(url)\\ndata = response.json()\\n\\n# Extract dates and temperatures\\ndates = data[\'daily\'][\'time\']\\ntemp_max = data[\'daily\'][\'temperature_2m_max\']\\ntemp_min = data[\'daily\'][\'temperature_2m_min\']\\n\\n# Calculate mean temperatures\\ntemp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\\n\\n# Create a DataFrame\\ndf = pd.DataFrame({\'Date\': pd.to_datetime(dates), \'Mean_Temperature\': temp_mean})\\n\\n# Step 2: Fit a Statistical Model\\n# Set the date as the index\\ndf.set_index(\'Date\', inplace=True)\\n\\n# Fit an ARIMA model\\nmodel = ARIMA(df[\'Mean_Temperature\'], order=(5, 1, 0)) # ARIMA(p, d, q)\\nmodel_fit = model.fit()\\n\\n# Step 3: Generate Predictions\\nforecast = model_fit.forecast(steps=7)\\nforecast_dates = pd.date_range(start=df.index[-1] + pd.Timedelta(days=1), periods=7)\\n\\n# Step 4: Display Predictions in a Markdown Table\\nforecast_df = pd.DataFrame({\'Date\': forecast_dates, \'Predicted_Mean_Temperature\': forecast})\\nforecast_df.reset_index(drop=True, inplace=True)\\n\\n# Convert DataFrame to markdown table\\nmarkdown_table = forecast_df.to_markdown(index=False)\\nmarkdown_table"}', call_id='call_VAfl2mzns1fu8Qwuyq5qsS3X', name='execute_code', type='function_call', id='fc_67edcd31b0b08191b7560341e4391ec00e2583e709c47d03', status='completed'), type='tool_call_item'),
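The call above fits `ARIMA(df['Mean_Temperature'], order=(5, 1, 0))`. That order means: difference the series once (d = 1), then regress each difference on its five predecessors (p = 5), with no moving-average terms (q = 0). Below is a dependency-free sketch of that idea, assuming conditional least squares and no constant term. This estimator is swapped in for illustration only. It is not what statsmodels does: its `ARIMA` fits by maximum likelihood in state-space form, so its coefficients will generally differ. All function names are hypothetical.

```python
def difference(series):
    """First difference: the d = 1 step of ARIMA(p, 1, 0)."""
    return [b - a for a, b in zip(series, series[1:])]


def solve(matrix, vector):
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(vector)
    aug = [list(row) + [v] for row, v in zip(matrix, vector)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        if aug[col][col] == 0:
            raise ValueError("singular system; try a smaller p")
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - tail) / aug[r][r]
    return solution


def fit_ar(diffs, p):
    """Conditional least-squares AR(p) coefficients, no constant; phi[i] weights lag i + 1."""
    if len(diffs) <= p:
        raise ValueError("need more differenced points than lags")
    rows = [diffs[t - p:t][::-1] for t in range(p, len(diffs))]  # [lag 1, ..., lag p]
    targets = diffs[p:]
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * y for row, y in zip(rows, targets)) for i in range(p)]
    return solve(xtx, xty)


def forecast_ar_on_differences(series, p, steps):
    """Forecast `steps` future levels: predict differences recursively, then cumulate them."""
    diffs = difference(series)
    phi = fit_ar(diffs, p)
    history, level, forecasts = list(diffs), series[-1], []
    for _ in range(steps):
        next_diff = sum(phi[i] * history[-1 - i] for i in range(p))
        history.append(next_diff)
        level += next_diff  # undo the differencing
        forecasts.append(level)
    return forecasts


# Toy series whose differences halve each step (8, 4, 2, ...), so AR(1) recovers phi = 0.5.
toy = [0, 8, 12, 14, 15, 15.5, 15.75]
print(fit_ar(difference(toy), 1))
print(forecast_ar_on_differences(toy, 1, 3))
```

On the transcript's data, this would be called as `forecast_ar_on_differences(values, p=5, steps=7)`, where `values` is a list of daily mean temperatures with no missing entries. With about 60 days of data, five lags still leave several dozen regression rows.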

ToolCallOutputItem(agent=Agent(name='Code Agent', ...), raw_item={'call_id': 'call_VAfl2mzns1fu8Qwuyq5qsS3X', 'output': '{\'stdout\': 
"---------------------------------------------------------------------------\\nModuleNotFoundError Traceback (most recent call last)\\nFile :1\\n----> 1 import requests\\n 2 import pandas as pd\\n 3 from statsmodels.tsa.arima.model import ARIMA\\n\\nModuleNotFoundError: No module named \'requests\'\\n", \'stderr\': \'\', \'success\': False, \'result\': None, \'error\': "No module named \'requests\'"}', 'type': 'function_call_output'}, output={'stdout': "---------------------------------------------------------------------------\nModuleNotFoundError Traceback (most recent call last)\nFile :1\n----> 1 import requests\n 2 import pandas as pd\n 3 from statsmodels.tsa.arima.model import ARIMA\n\nModuleNotFoundError: No module named 'requests'\n", 'stderr': '', 'success': False, 'result': None, 'error': "No module named 'requests'"}, type='tool_call_output_item'),
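The first run failed on `import requests`. The agent's fix was `!pip install ...`, which relies on IPython's shell escape. Below is a minimal sketch that checks up front which imports are unavailable, using `importlib.util.find_spec`, and then builds an equivalent pip command for the running interpreter. The list includes `tabulate` because pandas' `DataFrame.to_markdown` requires it. The helper names are hypothetical, and nothing is installed when the sketch runs.

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the top-level import names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def pip_install_command(packages):
    """Build a pip command bound to the running interpreter (not executed here)."""
    return [sys.executable, "-m", "pip", "install", *packages]


# Import names the script needs; `tabulate` is the optional dependency
# that pandas' DataFrame.to_markdown relies on.
required = ["requests", "pandas", "statsmodels", "tabulate"]
missing = missing_modules(required)
print("missing:", missing)
if missing:
    print(" ".join(pip_install_command(missing)))
```

If anything is missing, the command can be run with `subprocess.run(pip_install_command(missing), check=True)`, which, unlike `!pip`, also works outside IPython. The sketch assumes import names match PyPI package names. That holds for these four packages but not in general; for example, `sklearn` is installed as `scikit-learn`.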

ToolCallItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseFunctionToolCall(arguments='{"code":"!pip install requests pandas statsmodels"}', call_id='call_HvXhf4h3CrjJ6GSrvuWhVvjf', name='execute_code', 
type='function_call', id='fc_67edcd45600481919d6193d8c7b83e2f0e2583e709c47d03', status='completed'), type='tool_call_item'),

ToolCallOutputItem(agent=Agent(name='Code Agent', ...), raw_item={'call_id': 'call_HvXhf4h3CrjJ6GSrvuWhVvjf', 'output': "{'stdout': 'Collecting requests\\r\\n Downloading requests-2.32.3-py3-none-any.whl.metadata (4.6 
kB)\\r\\nRequirement already satisfied: pandas in /usr/local/lib/python3.11/site-packages (2.2.3)\\r\\nCollecting statsmodels\\r\\n Downloading statsmodels-0.14.4-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (9.2 kB)\\r\\nRequirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.11/site-packages (from requests) (2.1.1)\\r\\nRequirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.11/site-packages (from requests) (3.6)\\r\\nCollecting urllib3<3,>=1.21.1 (from requests)\\r\\n Downloading urllib3-2.3.0-py3-none-any.whl.metadata (6.5 kB)\\r\\nRequirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.11/site-packages (from requests) (2024.2.2)\\r\\nRequirement already satisfied: numpy>=1.23.2 in /usr/local/lib/python3.11/site-packages (from pandas) (1.26.4)\\r\\nRequirement already satisfied: python-dateutil>=2.8.2 in /usr/local/lib/python3.11/site-packages (from pandas) (2.9.0.post0)\\r\\nRequirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.11/site-packages (from pandas) (2024.2)\\r\\nRequirement already satisfied: tzdata>=2022.7 in /usr/local/lib/python3.11/site-packages (from pandas) (2024.2)\\r\\nCollecting scipy!=1.9.2,>=1.8 (from statsmodels)\\r\\n Downloading scipy-1.15.2-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (61 kB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/62.0 kB ? 
eta -:--:--\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 62.0/62.0 kB 172.6 MB/s eta 0:00:00\\r\\nCollecting patsy>=0.5.6 (from statsmodels)\\r\\n Downloading patsy-1.0.1-py2.py3-none-any.whl.metadata (3.3 kB)\\r\\nCollecting packaging>=21.3 (from statsmodels)\\r\\n Downloading packaging-24.2-py3-none-any.whl.metadata (3.2 kB)\\r\\nRequirement already satisfied: six>=1.5 in /usr/local/lib/python3.11/site-packages (from python-dateutil>=2.8.2->pandas) (1.16.0)\\r\\nDownloading requests-2.32.3-py3-none-any.whl (64 kB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/64.9 kB ? eta -:--:--\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 64.9/64.9 kB 206.2 MB/s eta 0:00:00\\r\\nDownloading statsmodels-0.14.4-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (10.8 MB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/10.8 MB ? eta -:--:--\\r ━━━━━━━━━━━━━━━━━━━━━━━━╺━━━━━━━━━━━━━━━ 6.6/10.8 MB 196.5 MB/s eta 0:00:01\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.8/10.8 MB 201.4 MB/s eta 0:00:00\\r\\nDownloading packaging-24.2-py3-none-any.whl (65 kB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/65.5 kB ? eta -:--:--\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 65.5/65.5 kB 211.7 MB/s eta 0:00:00\\r\\nDownloading patsy-1.0.1-py2.py3-none-any.whl (232 kB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/232.9 kB ? eta -:--:--\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 232.9/232.9 kB 220.5 MB/s eta 0:00:00\\r\\nDownloading scipy-1.15.2-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (37.6 MB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/37.6 MB ? 
eta -:--:--\\r ━━━━━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 5.2/37.6 MB 157.6 MB/s eta 0:00:01\\r ━━━━━━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━ 11.4/37.6 MB 178.8 MB/s eta 0:00:01\\r ━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━━━━━━━ 17.4/37.6 MB 176.5 MB/s eta 0:00:01\\r ━━━━━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━ 23.3/37.6 MB 172.6 MB/s eta 0:00:01\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╺━━━━━━━━ 29.3/37.6 MB 173.3 MB/s eta 0:00:01\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸━━ 35.4/37.6 MB 175.3 MB/s eta 0:00:01\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 37.6/37.6 MB 179.8 MB/s eta 0:00:00\\r\\nDownloading urllib3-2.3.0-py3-none-any.whl (128 kB)\\r\\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/128.4 kB ? eta -:--:--\\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 128.4/128.4 kB 210.0 MB/s eta 0:00:00\\r\\nInstalling collected packages: urllib3, scipy, patsy, packaging, requests, statsmodels\\r\\nSuccessfully installed packaging-24.2 patsy-1.0.1 requests-2.32.3 scipy-1.15.2 statsmodels-0.14.4 urllib3-2.3.0\\r\\n\\r\\n[notice] A new release of pip is available: 24.0 -> 25.0.1\\r\\n[notice] To update, run: pip install --upgrade pip\\r\\n', 'stderr': '', 'success': True, 'result': 'None', 'error': None}", 'type': 'function_call_output'}, output={'stdout': 'Collecting requests\r\n Downloading requests-2.32.3-py3-none-any.whl.metadata (4.6 kB)\r\nRequirement already satisfied: pandas in /usr/local/lib/python3.11/site-packages (2.2.3)\r\nCollecting statsmodels\r\n Downloading statsmodels-0.14.4-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (9.2 kB)\r\nRequirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.11/site-packages (from requests) (2.1.1)\r\nRequirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.11/site-packages (from requests) (3.6)\r\nCollecting urllib3<3,>=1.21.1 (from requests)\r\n Downloading urllib3-2.3.0-py3-none-any.whl.metadata (6.5 kB)\r\nRequirement already satisfied: certifi>=2017.4.17 in 
/usr/local/lib/python3.11/site-packages (from requests) (2024.2.2)\r\nRequirement already satisfied: numpy>=1.23.2 in /usr/local/lib/python3.11/site-packages (from pandas) (1.26.4)\r\nRequirement already satisfied: python-dateutil>=2.8.2 in /usr/local/lib/python3.11/site-packages (from pandas) (2.9.0.post0)\r\nRequirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.11/site-packages (from pandas) (2024.2)\r\nRequirement already satisfied: tzdata>=2022.7 in /usr/local/lib/python3.11/site-packages (from pandas) (2024.2)\r\nCollecting scipy!=1.9.2,>=1.8 (from statsmodels)\r\n Downloading scipy-1.15.2-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (61 kB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/62.0 kB ? eta -:--:--\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 62.0/62.0 kB 172.6 MB/s eta 0:00:00\r\nCollecting patsy>=0.5.6 (from statsmodels)\r\n Downloading patsy-1.0.1-py2.py3-none-any.whl.metadata (3.3 kB)\r\nCollecting packaging>=21.3 (from statsmodels)\r\n Downloading packaging-24.2-py3-none-any.whl.metadata (3.2 kB)\r\nRequirement already satisfied: six>=1.5 in /usr/local/lib/python3.11/site-packages (from python-dateutil>=2.8.2->pandas) (1.16.0)\r\nDownloading requests-2.32.3-py3-none-any.whl (64 kB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/64.9 kB ? eta -:--:--\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 64.9/64.9 kB 206.2 MB/s eta 0:00:00\r\nDownloading statsmodels-0.14.4-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (10.8 MB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/10.8 MB ? eta -:--:--\r ━━━━━━━━━━━━━━━━━━━━━━━━╺━━━━━━━━━━━━━━━ 6.6/10.8 MB 196.5 MB/s eta 0:00:01\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.8/10.8 MB 201.4 MB/s eta 0:00:00\r\nDownloading packaging-24.2-py3-none-any.whl (65 kB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/65.5 kB ? 
eta -:--:--\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 65.5/65.5 kB 211.7 MB/s eta 0:00:00\r\nDownloading patsy-1.0.1-py2.py3-none-any.whl (232 kB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/232.9 kB ? eta -:--:--\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 232.9/232.9 kB 220.5 MB/s eta 0:00:00\r\nDownloading scipy-1.15.2-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (37.6 MB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/37.6 MB ? eta -:--:--\r ━━━━━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 5.2/37.6 MB 157.6 MB/s eta 0:00:01\r ━━━━━━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━ 11.4/37.6 MB 178.8 MB/s eta 0:00:01\r ━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━━━━━━━ 17.4/37.6 MB 176.5 MB/s eta 0:00:01\r ━━━━━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━ 23.3/37.6 MB 172.6 MB/s eta 0:00:01\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╺━━━━━━━━ 29.3/37.6 MB 173.3 MB/s eta 0:00:01\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸━━ 35.4/37.6 MB 175.3 MB/s eta 0:00:01\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 37.6/37.6 MB 179.8 MB/s eta 0:00:00\r\nDownloading urllib3-2.3.0-py3-none-any.whl (128 kB)\r\n ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/128.4 kB ? eta -:--:--\r ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 128.4/128.4 kB 210.0 MB/s eta 0:00:00\r\nInstalling collected packages: urllib3, scipy, patsy, packaging, requests, statsmodels\r\nSuccessfully installed packaging-24.2 patsy-1.0.1 requests-2.32.3 scipy-1.15.2 statsmodels-0.14.4 urllib3-2.3.0\r\n\r\n[notice] A new release of pip is available: 24.0 -> 25.0.1\r\n[notice] To update, run: pip install --upgrade pip\r\n', 'stderr': '', 'success': True, 'result': 'None', 'error': None}, type='tool_call_output_item'),
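The install above requested only `requests pandas statsmodels`. Step 4 calls `DataFrame.to_markdown`, which additionally requires the optional `tabulate` package and raises `ImportError` if it is missing. The log does not show whether `tabulate` was already present in this sandbox. Below is a stdlib-only sketch of the Step 4 output as a fallback. It rebuilds the seven forecast dates the way `pd.date_range(start=df.index[-1] + pd.Timedelta(days=1), periods=7)` does, then renders a pipe-delimited markdown table. The helper names are hypothetical. The value column is a placeholder because the log never shows forecast numbers.

```python
from datetime import date, timedelta


def forecast_dates(last_observed, steps):
    """The `steps` calendar days after `last_observed`, one per day."""
    return [last_observed + timedelta(days=i) for i in range(1, steps + 1)]


def to_markdown_table(headers, rows):
    """Render rows as a pipe-delimited markdown table (no `tabulate` needed)."""
    lines = [
        "| " + " | ".join(headers) + " |",
        "|" + "|".join("---" for _ in headers) + "|",
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(cell) for cell in row) + " |")
    return "\n".join(lines)


# Assumes the series still ends on the requested end_date; if trailing days
# were dropped as missing, pass the actual last observed date instead.
dates = forecast_dates(date(2025, 4, 2), 7)
# Placeholder column: the log does not include the model's forecast values.
rows = [(d.isoformat(), "n/a") for d in dates]
print(to_markdown_table(["Date", "Predicted_Mean_Temperature"], rows))
```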

ToolCallItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseFunctionToolCall(arguments='{"code":"import requests\\nimport pandas as pd\\nfrom statsmodels.tsa.arima.model import ARIMA\\n\\n# Step 1: Retrieve Historical 
Weather Data\\nlatitude = 44.6488\\nlongitude = -63.5752\\nstart_date = \\"2025-02-01\\"\\nend_date = \\"2025-04-02\\"\\ntimezone = \\"America/Halifax\\"\\n\\nurl = f\\"https://archive-api.open-meteo.com/v1/archive?latitude={latitude}&longitude={longitude}&start_date={start_date}&end_date={end_date}&daily=temperature_2m_max,temperature_2m_min&timezone={timezone}\\"\\n\\nresponse = requests.get(url)\\ndata = response.json()\\n\\n# Extract dates and temperatures\\ndates = data[\'daily\'][\'time\']\\ntemp_max = data[\'daily\'][\'temperature_2m_max\']\\ntemp_min = data[\'daily\'][\'temperature_2m_min\']\\n\\n# Calculate mean temperatures\\ntemp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\\n\\n# Create a DataFrame\\ndf = pd.DataFrame({\'Date\': pd.to_datetime(dates), \'Mean_Temperature\': temp_mean})\\n\\n# Step 2: Fit a Statistical Model\\n# Set the date as the index\\ndf.set_index(\'Date\', inplace=True)\\n\\n# Fit an ARIMA model\\nmodel = ARIMA(df[\'Mean_Temperature\'], order=(5, 1, 0)) # ARIMA(p, d, q)\\nmodel_fit = model.fit()\\n\\n# Step 3: Generate Predictions\\nforecast = model_fit.forecast(steps=7)\\nforecast_dates = pd.date_range(start=df.index[-1] + pd.Timedelta(days=1), periods=7)\\n\\n# Step 4: Display Predictions in a Markdown Table\\nforecast_df = pd.DataFrame({\'Date\': forecast_dates, \'Predicted_Mean_Temperature\': forecast})\\nforecast_df.reset_index(drop=True, inplace=True)\\n\\n# Convert DataFrame to markdown table\\nmarkdown_table = forecast_df.to_markdown(index=False)\\nmarkdown_table"}', call_id='call_Beq2SEw3yusSsGCbZdJJ7KDe', name='execute_code', type='function_call', id='fc_67edcd4f10808191b0ce69a2c6c8aee10e2583e709c47d03', status='completed'), type='tool_call_item'),

ToolCallOutputItem(agent=Agent(name='Code Agent', ...), raw_item={'call_id': 'call_Beq2SEw3yusSsGCbZdJJ7KDe', 'output': '{\'stdout\': "---------------------------------------------------------------------------\\nTypeError 
Traceback (most recent call last)\\nFile :23\\n 20 temp_min = data[\'daily\'][\'temperature_2m_min\']\\n 22 # Calculate mean temperatures\\n---> 23 temp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\\n 25 # Create a DataFrame\\n 26 df = pd.DataFrame({\'Date\': pd.to_datetime(dates), \'Mean_Temperature\': temp_mean})\\n\\nFile :23, in (.0)\\n 20 temp_min = data[\'daily\'][\'temperature_2m_min\']\\n 22 # Calculate mean temperatures\\n---> 23 temp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\\n 25 # Create a DataFrame\\n 26 df = pd.DataFrame({\'Date\': pd.to_datetime(dates), \'Mean_Temperature\': temp_mean})\\n\\nTypeError: unsupported operand type(s) for +: \'NoneType\' and \'NoneType\'\\n", \'stderr\': \'\', \'success\': False, \'result\': None, \'error\': "unsupported operand type(s) for +: \'NoneType\' and \'NoneType\'"}', 'type': 'function_call_output'}, output={'stdout': "---------------------------------------------------------------------------\nTypeError Traceback (most recent call last)\nFile :23\n 20 temp_min = data['daily']['temperature_2m_min']\n 22 # Calculate mean temperatures\n---> 23 temp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\n 25 # Create a DataFrame\n 26 df = pd.DataFrame({'Date': pd.to_datetime(dates), 'Mean_Temperature': temp_mean})\n\nFile :23, in (.0)\n 20 temp_min = data['daily']['temperature_2m_min']\n 22 # Calculate mean temperatures\n---> 23 temp_mean = [(max_temp + min_temp) / 2 for max_temp, min_temp in zip(temp_max, temp_min)]\n 25 # Create a DataFrame\n 26 df = pd.DataFrame({'Date': pd.to_datetime(dates), 'Mean_Temperature': temp_mean})\n\nTypeError: unsupported operand type(s) for +: 'NoneType' and 'NoneType'\n", 'stderr': '', 'success': False, 'result': None, 'error': "unsupported operand type(s) for +: 'NoneType' and 'NoneType'"}, type='tool_call_output_item'),

MessageOutputItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseOutputMessage(id='msg_67edcd54c7548191a2dda9de5b3a36960e2583e709c47d03', content=[ResponseOutputText(annotations=[], text="The error indicates that some of the temperature values are `None`. This can happen if the API returns missing data for certain days. To address this, we should filter out any `None` values before calculating the mean temperatures.\n\nLet's update the code to handle this situation and re-run the analysis.", type='output_text')], role='assistant', status='completed', type='message'), type='message_output_item'),
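The fix described above can be sketched without pandas. The helper below has a hypothetical name and is not part of the logged code. It follows the same logic as the next tool call: it pairs each date with its daily maximum and minimum, keeps only days where both values are present, and averages the two. The input dictionary mimics the shape of the Open-Meteo `daily` block used in the log (`time`, `temperature_2m_max`, `temperature_2m_min`). The sample values are made up.

```python
def daily_means(daily):
    """Return (dates, means) for days where both max and min are present.

    `daily` mimics the 'daily' block of the Open-Meteo response, where
    missing values arrive as JSON null and are decoded to None.
    """
    dates, means = [], []
    for day, t_max, t_min in zip(daily["time"],
                                 daily["temperature_2m_max"],
                                 daily["temperature_2m_min"]):
        if t_max is None or t_min is None:
            continue  # skip incomplete days instead of failing on None + None
        dates.append(day)
        means.append((t_max + t_min) / 2)
    return dates, means


# Made-up sample in the same shape as the API's 'daily' block
sample = {
    "time": ["2025-03-30", "2025-03-31", "2025-04-01"],
    "temperature_2m_max": [6.0, 4.0, None],
    "temperature_2m_min": [-1.0, 1.0, None],
}
print(daily_means(sample))  # (['2025-03-30', '2025-03-31'], [2.5, 2.5])
```

Because dates and means are built together, they cannot fall out of alignment. The logged code avoids that problem differently, with a second `zip` over `temp_mean` and `dates`.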

ToolCallItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseFunctionToolCall(arguments='{"code":"import requests\\nimport pandas as pd\\nfrom statsmodels.tsa.arima.model import ARIMA\\n\\n# Step 1: Retrieve Historical Weather Data\\nlatitude = 44.6488\\nlongitude = -63.5752\\nstart_date = \\"2025-02-01\\"\\nend_date = \\"2025-04-02\\"\\ntimezone = \\"America/Halifax\\"\\n\\nurl = f\\"https://archive-api.open-meteo.com/v1/archive?latitude={latitude}&longitude={longitude}&start_date={start_date}&end_date={end_date}&daily=temperature_2m_max,temperature_2m_min&timezone={timezone}\\"\\n\\nresponse = requests.get(url)\\ndata = response.json()\\n\\n# Extract dates and temperatures\\ndates = data[\'daily\'][\'time\']\\ntemp_max = data[\'daily\'][\'temperature_2m_max\']\\ntemp_min = data[\'daily\'][\'temperature_2m_min\']\\n\\n# Calculate mean temperatures, excluding None values\\ntemp_mean = []\\nfor max_temp, min_temp in zip(temp_max, temp_min):\\n if max_temp is not None and min_temp is not None:\\n temp_mean.append((max_temp + min_temp) / 2)\\n else:\\n temp_mean.append(None)\\n\\n# Remove None values\\nvalid_temp_mean = [temp for temp in temp_mean if temp is not None]\\nvalid_dates = [date for temp, date in zip(temp_mean, dates) if temp is not None]\\n\\n# Create a DataFrame\\ndf = pd.DataFrame({\'Date\': pd.to_datetime(valid_dates), \'Mean_Temperature\': valid_temp_mean})\\n\\n# Step 2: Fit a Statistical Model\\n# Set the date as the index\\ndf.set_index(\'Date\', inplace=True)\\n\\n# Fit an ARIMA model\\nmodel = ARIMA(df[\'Mean_Temperature\'], order=(5, 1, 0))\\nmodel_fit = model.fit()\\n\\n# Step 3: Generate Predictions\\nforecast = model_fit.forecast(steps=7)\\nforecast_dates = pd.date_range(start=df.index[-1] + pd.Timedelta(days=1), periods=7)\\n\\n# Step 4: Display Predictions in a Markdown Table\\nforecast_df = pd.DataFrame({\'Date\': forecast_dates, \'Predicted_Mean_Temperature\': forecast})\\nforecast_df.reset_index(drop=True, inplace=True)\\n\\n# Convert DataFrame to markdown table\\nmarkdown_table = forecast_df.to_markdown(index=False)\\nmarkdown_table"}', call_id='call_QrNg66gRdebVc3jSr6eK4hPw', name='execute_code', type='function_call', id='fc_67edcd5691a48191920729697883e5110e2583e709c47d03', status='completed'), type='tool_call_item'),

ToolCallOutputItem(agent=Agent(name='Code Agent', ...), raw_item={'call_id': 'call_QrNg66gRdebVc3jSr6eK4hPw', 'output': '{\'stdout\': \'---------------------------------------------------------------------------\\nModuleNotFoundError Traceback (most recent call last)\\nFile /usr/local/lib/python3.11/site-packages/pandas/compat/_optional.py:135, in import_optional_dependency(name, extra, errors, min_version)\\n 134 try:\\n--> 135 module = importlib.import_module(name)\\n 136 except ImportError:\\n\\nFile /usr/local/lib/python3.11/importlib/__init__.py:126, in import_module(name, package)\\n 125 level += 1\\n--> 126 return _bootstrap._gcd_import(name[level:], package, level)\\n\\nFile :1206, in _gcd_import(name, package, level)\\n\\nFile :1178, in _find_and_load(name, import_)\\n\\nFile :1142, in _find_and_load_unlocked(name, import_)\\n\\nModuleNotFoundError: No module named \\\'tabulate\\\'\\n\\nDuring handling of the above exception, another exception occurred:\\n\\nImportError Traceback (most recent call last)\\nFile :54\\n 51 forecast_df.reset_index(drop=True, inplace=True)\\n 53 # Convert DataFrame to markdown table\\n---> 54 markdown_table = forecast_df.to_markdown(index=False)\\n 55 markdown_table\\n\\nFile /usr/local/lib/python3.11/site-packages/pandas/util/_decorators.py:333, in deprecate_nonkeyword_arguments..decorate..wrapper(*args, **kwargs)\\n 327 if len(args) > num_allow_args:\\n 328 warnings.warn(\\n 329 msg.format(arguments=_format_argument_list(allow_args)),\\n 330 FutureWarning,\\n 331 stacklevel=find_stack_level(),\\n 332 )\\n--> 333 return func(*args, **kwargs)\\n\\nFile /usr/local/lib/python3.11/site-packages/pandas/core/frame.py:2983, in DataFrame.to_markdown(self, buf, mode, index, storage_options, **kwargs)\\n 2981 kwargs.setdefault("tablefmt", "pipe")\\n 2982 kwargs.setdefault("showindex", index)\\n-> 2983 tabulate = import_optional_dependency("tabulate")\\n 2984 result = tabulate.tabulate(self, **kwargs)\\n 2985 if buf is None:\\n\\nFile /usr/local/lib/python3.11/site-packages/pandas/compat/_optional.py:138, in import_optional_dependency(name, extra, errors, min_version)\\n 136 except ImportError:\\n 137 if errors == "raise":\\n--> 138 raise ImportError(msg)\\n 139 return None\\n 141 # Handle submodules: if we have submodule, grab parent module from sys.modules\\n\\nImportError: Missing optional dependency \\\'tabulate\\\'. Use pip or conda to install tabulate.\\n\', \'stderr\': \'/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\\n self._init_dates(dates, freq)\\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\\n self._init_dates(dates, freq)\\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\\n self._init_dates(dates, freq)\\n\', \'success\': False, \'result\': None, \'error\': "Missing optional dependency \'tabulate\'. Use pip or conda to install tabulate."}', 'type': 'function_call_output'}, output={'stdout': '---------------------------------------------------------------------------\nModuleNotFoundError Traceback (most recent call last)\nFile /usr/local/lib/python3.11/site-packages/pandas/compat/_optional.py:135, in import_optional_dependency(name, extra, errors, min_version)\n 134 try:\n--> 135 module = importlib.import_module(name)\n 136 except ImportError:\n\nFile /usr/local/lib/python3.11/importlib/__init__.py:126, in import_module(name, package)\n 125 level += 1\n--> 126 return _bootstrap._gcd_import(name[level:], package, level)\n\nFile :1206, in _gcd_import(name, package, level)\n\nFile :1178, in _find_and_load(name, import_)\n\nFile :1142, in _find_and_load_unlocked(name, import_)\n\nModuleNotFoundError: No module named \'tabulate\'\n\nDuring handling of the above exception, another exception occurred:\n\nImportError Traceback (most recent call last)\nFile :54\n 51 forecast_df.reset_index(drop=True, inplace=True)\n 53 # Convert DataFrame to markdown table\n---> 54 markdown_table = forecast_df.to_markdown(index=False)\n 55 markdown_table\n\nFile /usr/local/lib/python3.11/site-packages/pandas/util/_decorators.py:333, in deprecate_nonkeyword_arguments..decorate..wrapper(*args, **kwargs)\n 327 if len(args) > num_allow_args:\n 328 warnings.warn(\n 329 msg.format(arguments=_format_argument_list(allow_args)),\n 330 FutureWarning,\n 331 stacklevel=find_stack_level(),\n 332 )\n--> 333 return func(*args, **kwargs)\n\nFile /usr/local/lib/python3.11/site-packages/pandas/core/frame.py:2983, in DataFrame.to_markdown(self, buf, mode, index, storage_options, **kwargs)\n 2981 kwargs.setdefault("tablefmt", "pipe")\n 2982 kwargs.setdefault("showindex", index)\n-> 2983 tabulate = import_optional_dependency("tabulate")\n 2984 result = tabulate.tabulate(self, **kwargs)\n 2985 if buf is None:\n\nFile /usr/local/lib/python3.11/site-packages/pandas/compat/_optional.py:138, in import_optional_dependency(name, extra, errors, min_version)\n 136 except ImportError:\n 137 if errors == "raise":\n--> 138 raise ImportError(msg)\n 139 return None\n 141 # Handle submodules: if we have submodule, grab parent module from sys.modules\n\nImportError: Missing optional dependency \'tabulate\'. Use pip or conda to install tabulate.\n', 'stderr': '/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\n self._init_dates(dates, freq)\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\n self._init_dates(dates, freq)\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\n self._init_dates(dates, freq)\n', 'success': False, 'result': None, 'error': "Missing optional dependency 'tabulate'. Use pip or conda to install tabulate."}, type='tool_call_output_item'),
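The traceback shows that `DataFrame.to_markdown` delegates to the optional `tabulate` package, and the agent's fix was to install it. If installing packages is not possible, a pipe table can be built by hand. The function below is a hypothetical stand-in, not a pandas or tabulate API. It renders rows as a Markdown pipe table, formats floats to a fixed number of decimals, and right-aligns all-numeric columns, similar to the `pipe` output in the log.

```python
def to_pipe_table(headers, rows, decimals=2):
    """Render rows as a Markdown pipe table (hypothetical fallback helper)."""
    def fmt(value):
        # Fixed decimals for floats; everything else is shown as-is
        return f"{value:.{decimals}f}" if isinstance(value, float) else str(value)

    # Right-align a column if every value in it is numeric, else left-align
    numeric = [all(isinstance(r[i], (int, float)) for r in rows)
               for i in range(len(headers))]
    lines = ["| " + " | ".join(headers) + " |"]
    lines.append("|" + "|".join("---:" if n else ":---" for n in numeric) + "|")
    for row in rows:
        lines.append("| " + " | ".join(fmt(v) for v in row) + " |")
    return "\n".join(lines)


print(to_pipe_table(["Date", "Predicted_Mean_Temperature"],
                    [["2025-04-01", 3.30113], ["2025-04-02", 2.24348]]))
```

This prints a two-row table with the values rounded to `3.30` and `2.24`. When `tabulate` is available, `to_markdown` remains the simpler choice.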

ToolCallItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseFunctionToolCall(arguments='{"code":"!pip install tabulate"}', call_id='call_2saMOvIPSQs9ATZ72S5vNOWN', name='execute_code', type='function_call', id='fc_67edcd5d4ad08191a151d5e634e22e900e2583e709c47d03', status='completed'), type='tool_call_item'),

ToolCallOutputItem(agent=Agent(name='Code Agent', ...), raw_item={'call_id': 'call_2saMOvIPSQs9ATZ72S5vNOWN', 'output': "{'stdout': 'Collecting tabulate\\r\\n Downloading tabulate-0.9.0-py3-none-any.whl.metadata (34 kB)\\r\\nDownloading tabulate-0.9.0-py3-none-any.whl (35 kB)\\r\\nInstalling collected packages: tabulate\\r\\nSuccessfully installed tabulate-0.9.0\\r\\n\\r\\n[notice] A new release of pip is available: 24.0 -> 25.0.1\\r\\n[notice] To update, run: pip install --upgrade pip\\r\\n', 'stderr': '', 'success': True, 'result': 'None', 'error': None}", 'type': 'function_call_output'}, output={'stdout': 'Collecting tabulate\r\n Downloading tabulate-0.9.0-py3-none-any.whl.metadata (34 kB)\r\nDownloading tabulate-0.9.0-py3-none-any.whl (35 kB)\r\nInstalling collected packages: tabulate\r\nSuccessfully installed tabulate-0.9.0\r\n\r\n[notice] A new release of pip is available: 24.0 -> 25.0.1\r\n[notice] To update, run: pip install --upgrade pip\r\n', 'stderr': '', 'success': True, 'result': 'None', 'error': None}, type='tool_call_output_item'),

ToolCallItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseFunctionToolCall(arguments='{"code":"import requests\\nimport pandas as pd\\nfrom statsmodels.tsa.arima.model import ARIMA\\n\\n# Step 1: Retrieve Historical Weather Data\\nlatitude = 44.6488\\nlongitude = -63.5752\\nstart_date = \\"2025-02-01\\"\\nend_date = \\"2025-04-02\\"\\ntimezone = \\"America/Halifax\\"\\n\\nurl = f\\"https://archive-api.open-meteo.com/v1/archive?latitude={latitude}&longitude={longitude}&start_date={start_date}&end_date={end_date}&daily=temperature_2m_max,temperature_2m_min&timezone={timezone}\\"\\n\\nresponse = requests.get(url)\\ndata = response.json()\\n\\n# Extract dates and temperatures\\ndates = data[\'daily\'][\'time\']\\ntemp_max = data[\'daily\'][\'temperature_2m_max\']\\ntemp_min = data[\'daily\'][\'temperature_2m_min\']\\n\\n# Calculate mean temperatures, excluding None values\\ntemp_mean = []\\nfor max_temp, min_temp in zip(temp_max, temp_min):\\n if max_temp is not None and min_temp is not None:\\n temp_mean.append((max_temp + min_temp) / 2)\\n else:\\n temp_mean.append(None)\\n\\n# Remove None values\\nvalid_temp_mean = [temp for temp in temp_mean if temp is not None]\\nvalid_dates = [date for temp, date in zip(temp_mean, dates) if temp is not None]\\n\\n# Create a DataFrame\\ndf = pd.DataFrame({\'Date\': pd.to_datetime(valid_dates), \'Mean_Temperature\': valid_temp_mean})\\n\\n# Step 2: Fit a Statistical Model\\n# Set the date as the index\\ndf.set_index(\'Date\', inplace=True)\\n\\n# Fit an ARIMA model\\nmodel = ARIMA(df[\'Mean_Temperature\'], order=(5, 1, 0))\\nmodel_fit = model.fit()\\n\\n# Step 3: Generate Predictions\\nforecast = model_fit.forecast(steps=7)\\nforecast_dates = pd.date_range(start=df.index[-1] + pd.Timedelta(days=1), periods=7)\\n\\n# Step 4: Display Predictions in a Markdown Table\\nforecast_df = pd.DataFrame({\'Date\': forecast_dates, \'Predicted_Mean_Temperature\': forecast})\\nforecast_df.reset_index(drop=True, inplace=True)\\n\\n# Convert DataFrame to markdown table\\nmarkdown_table = forecast_df.to_markdown(index=False)\\nmarkdown_table"}', call_id='call_jLN6sUobJsXryX5lvfZ6uisV', name='execute_code', type='function_call', id='fc_67edcd5fd89481919fdac5c5368681160e2583e709c47d03', status='completed'), type='tool_call_item'),

ToolCallOutputItem(agent=Agent(name='Code Agent', ...), raw_item={'call_id': 'call_jLN6sUobJsXryX5lvfZ6uisV', 'output': '{\'stdout\': "Out[1]: \'| Date | Predicted_Mean_Temperature |\\\\n|:--------------------|-----------------------------:|\\\\n| 2025-04-01 00:00:00 | 3.30113 |\\\\n| 2025-04-02 00:00:00 | 2.24348 |\\\\n| 2025-04-03 00:00:00 | 2.5076 |\\\\n| 2025-04-04 00:00:00 | 2.32606 |\\\\n| 2025-04-05 00:00:00 | 2.01458 |\\\\n| 2025-04-06 00:00:00 | 2.52581 |\\\\n| 2025-04-07 00:00:00 | 2.66123 |\'\\n", \'stderr\': \'/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\\n self._init_dates(dates, freq)\\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\\n self._init_dates(dates, freq)\\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\\n self._init_dates(dates, freq)\\n\', \'success\': True, \'result\': "\'| Date | Predicted_Mean_Temperature |\\\\n|:--------------------|-----------------------------:|\\\\n| 2025-04-01 00:00:00 | 3.30113 |\\\\n| 2025-04-02 00:00:00 | 2.24348 |\\\\n| 2025-04-03 00:00:00 | 2.5076 |\\\\n| 2025-04-04 00:00:00 | 2.32606 |\\\\n| 2025-04-05 00:00:00 | 2.01458 |\\\\n| 2025-04-06 00:00:00 | 2.52581 |\\\\n| 2025-04-07 00:00:00 | 2.66123 |\'", \'error\': None}', 'type': 'function_call_output'}, output={'stdout': "Out[1]: '| Date | Predicted_Mean_Temperature |\\n|:--------------------|-----------------------------:|\\n| 2025-04-01 00:00:00 | 3.30113 |\\n| 2025-04-02 00:00:00 | 2.24348 |\\n| 2025-04-03 00:00:00 | 2.5076 |\\n| 2025-04-04 00:00:00 | 2.32606 |\\n| 2025-04-05 00:00:00 | 2.01458 |\\n| 2025-04-06 00:00:00 | 2.52581 |\\n| 2025-04-07 00:00:00 | 2.66123 |'\n", 'stderr': '/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\n self._init_dates(dates, freq)\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\n self._init_dates(dates, freq)\n/usr/local/lib/python3.11/site-packages/statsmodels/tsa/base/tsa_model.py:473: ValueWarning: No frequency information was provided, so inferred frequency D will be used.\n self._init_dates(dates, freq)\n', 'success': True, 'result': "'| Date | Predicted_Mean_Temperature |\\n|:--------------------|-----------------------------:|\\n| 2025-04-01 00:00:00 | 3.30113 |\\n| 2025-04-02 00:00:00 | 2.24348 |\\n| 2025-04-03 00:00:00 | 2.5076 |\\n| 2025-04-04 00:00:00 | 2.32606 |\\n| 2025-04-05 00:00:00 | 2.01458 |\\n| 2025-04-06 00:00:00 | 2.52581 |\\n| 2025-04-07 00:00:00 | 2.66123 |'", 'error': None}, type='tool_call_output_item'),
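The `ValueWarning` in stderr says that statsmodels inferred a daily (`D`) frequency because the DataFrame index had none attached. That inference only holds if the dates are evenly spaced. The code drops days with missing values, so a gap in the middle of the series would break the assumption. The minimal standard-library check below assumes ISO-format date strings like those in the API's `time` field. The helper name is hypothetical. In pandas, calling `df.asfreq('D')` before fitting attaches the frequency explicitly. It also inserts NaN rows for any missing days, and those rows would then need handling.

```python
from datetime import date, timedelta


def missing_days(iso_dates):
    """Return the ISO dates absent from an ascending list of daily ISO dates."""
    days = [date.fromisoformat(d) for d in iso_dates]
    missing = []
    for prev, cur in zip(days, days[1:]):
        step = prev + timedelta(days=1)
        while step < cur:  # every day strictly between two observations is a gap
            missing.append(step.isoformat())
            step += timedelta(days=1)
    return missing


print(missing_days(["2025-03-27", "2025-03-28", "2025-03-31"]))  # ['2025-03-29', '2025-03-30']
```

An empty result means the kept dates form an unbroken daily sequence, so a daily frequency is a safe assumption for the fit.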

MessageOutputItem(agent=Agent(name='Code Agent', ...), raw_item=ResponseOutputMessage(id='msg_67edcd652b6c8191a4f014b972412d150e2583e709c47d03', content=[ResponseOutputText(annotations=[], text="Here's the markdown table with the predicted mean temperatures for Halifax, Nova Scotia, from April 1 to April 7, 2025:\n\n```markdown\n| Date | Predicted_Mean_Temperature |\n|:--------------------|-----------------------------:|\n| 2025-04-01 00:00:00 | 3.30113 |\n| 2025-04-02 00:00:00 | 2.24348 |\n| 2025-04-03 00:00:00 | 2.5076 |\n| 2025-04-04 00:00:00 | 2.32606 |\n| 2025-04-05 00:00:00 | 2.01458 |\n| 2025-04-06 00:00:00 | 2.52581 |\n| 2025-04-07 00:00:00 | 2.66123 |\n```\n\nThese predictions were generated by an ARIMA(5, 1, 0) model fitted to daily mean temperatures (°C, the average of each day's maximum and minimum) from the Open-Meteo archive. Days with missing values were dropped, so the fitted series ends on 2025-03-31.", type='output_text')], role='assistant', status='completed', type='message'), type='message_output_item')]
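The data request ran through 2025-04-02, yet the forecast starts on 2025-04-01. The logged code builds forecast dates from `df.index[-1] + pd.Timedelta(days=1)` onward. The last day that survived the `None` filter must therefore have been 2025-03-31. That means the first two "predictions" cover days inside the requested range for which the response had no usable values. The standard-library sketch below reproduces this date arithmetic. The helper name is hypothetical.

```python
from datetime import date, timedelta


def forecast_dates(last_observed, steps):
    """ISO dates for the `steps` days following the last observed day."""
    start = date.fromisoformat(last_observed)
    return [(start + timedelta(days=k)).isoformat() for k in range(1, steps + 1)]


print(forecast_dates("2025-03-31", 7))
# ['2025-04-01', '2025-04-02', '2025-04-03', '2025-04-04', '2025-04-05', '2025-04-06', '2025-04-07']
```

This matches the seven dates in the table above. Printing `df.index[-1]` before forecasting is a quick way to confirm where the usable archive data actually ends.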